Neural Keyword Spotting on LibriBrain

End-to-end walkthrough for Neural Keyword Spotting (KWS) on the LibriBrain MEG corpus: load data, frame the task, train a compact baseline, and evaluate with precision–recall metrics tailored to extreme class imbalance.

Introduction

Note: This tutorial is released in conjunction with our DBM workshop paper “Elementary, My Dear Watson: Non-Invasive Neural Keyword Spotting in the LibriBrain Dataset”. It provides a comprehensive introduction to the methods and concepts presented in the paper, together with a hands-on, pedagogical walkthrough.

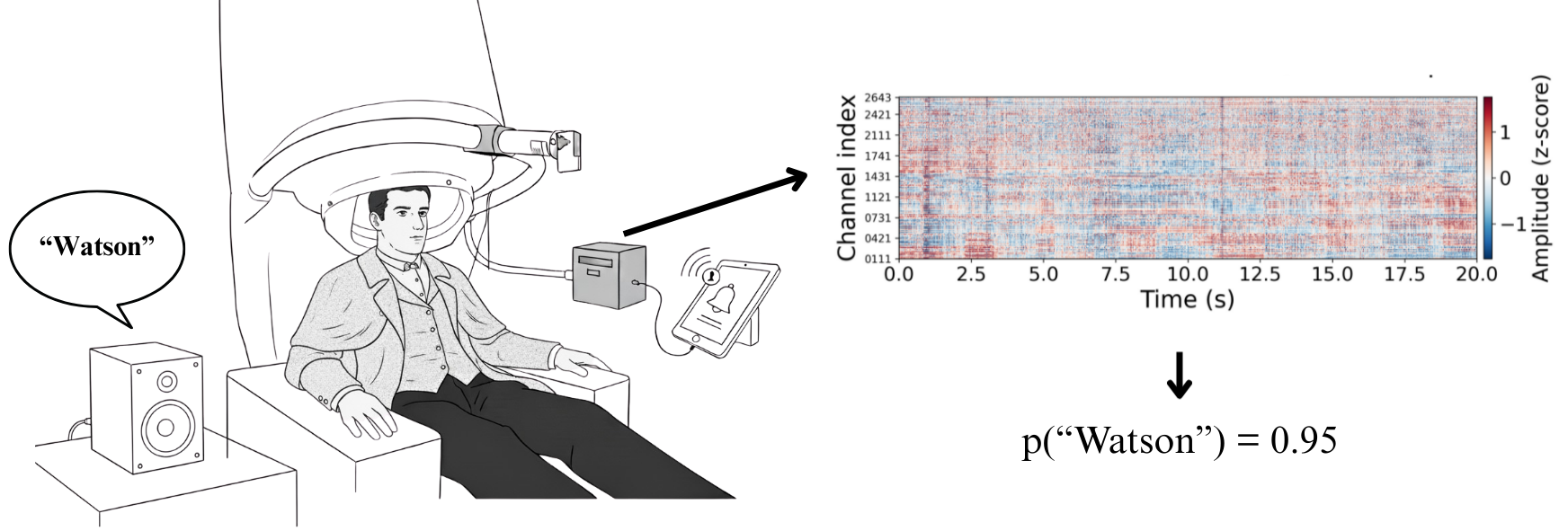

Neural Keyword Spotting (KWS) from brain signals presents a promising direction for non-invasive brain–computer interfaces (BCIs), with potential applications in assistive communication technologies for individuals with speech impairments. While invasive BCIs have achieved remarkable success in speech decoding

This tutorial demonstrates how to build and evaluate a neural keyword spotting system using the LibriBrain dataset.

Motivation and Context

Why Keyword Spotting?

Full speech decoding from non-invasive brain signals remains an open challenge. However, keyword spotting—detecting specific words of interest—offers a more tractable goal that could still enable meaningful communication. Even detecting a single keyword reliably (a “1-bit channel”) could significantly improve quality of life for individuals with severe communication disabilities, allowing them to:

- Answer yes/no questions

- Signal alerts or requests

- Control devices through specific command words

- Maintain basic communication when other channels fail

The Challenge: Rare Events in Noisy Data

Keyword spotting from MEG presents two fundamental challenges:

- Extreme Class Imbalance: Even short, common words like “the” represent only ~5.5% of all words in naturalistic speech. Target keywords like “Watson” appear in just 0.12% of word windows, creating a severe imbalance.

- Low Signal-to-Noise Ratio: Unlike invasive recordings with electrode arrays placed directly on the cortex, non-invasive MEG/EEG sensors sit outside the skull, capturing attenuated and spatially blurred neural signals mixed with physiological and environmental noise.

These challenges require specialized techniques, which we cover in this tutorial.

Dataset and Methodology

The LibriBrain Dataset

The LibriBrain dataset

Task Formulation

We frame keyword detection as event-referenced binary classification:

- Input: MEG signals (306 channels × T timepoints) windowed around word onsets

- Output: Probability p ∈ [0, 1] that the target keyword occurs in this window

- Window length: Keyword duration + buffers (pre-/post-onset)

This differs from continuous detection by:

- Focusing on word boundaries (where linguistic information peaks)

- Avoiding the combinatorial explosion of sliding windows

- Leveraging precise temporal alignment from annotations
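The event-referenced windowing described above can be sketched as follows. This is a minimal illustration, not the `pnpl` API: the function name `extract_word_windows`, the buffer lengths `pre_s` and `post_s`, and the synthetic recording are all assumptions, and the 250 Hz sampling rate is taken from the augmentation settings later in this tutorial.

```python
import numpy as np

def extract_word_windows(meg, onsets_s, is_keyword, sfreq=250.0,
                         pre_s=0.1, post_s=0.9):
    """Cut fixed-length windows around word onsets (hypothetical helper).

    meg: array of shape (n_channels, n_samples)
    onsets_s: word onset times in seconds
    is_keyword: one boolean per word (True if it is the target keyword)
    Returns (windows, labels) with windows shaped (n_words, n_channels, T).
    """
    pre = int(round(pre_s * sfreq))
    post = int(round(post_s * sfreq))
    windows, labels = [], []
    for onset, label in zip(onsets_s, is_keyword):
        center = int(round(onset * sfreq))
        start, stop = center - pre, center + post
        if start < 0 or stop > meg.shape[1]:
            continue  # skip words whose window would run off the recording
        windows.append(meg[:, start:stop])
        labels.append(int(label))
    if not windows:
        return np.empty((0, meg.shape[0], pre + post)), np.empty(0, dtype=int)
    return np.stack(windows), np.array(labels)

# Synthetic 10-second recording with 306 channels (stand-in for real MEG)
rng = np.random.default_rng(0)
meg = rng.standard_normal((306, 250 * 10))
windows, labels = extract_word_windows(
    meg, onsets_s=[0.05, 1.0, 2.5, 9.8], is_keyword=[False, True, False, False]
)
print(windows.shape, labels)
```

The first and last words are dropped because their windows would extend past the edges of the recording, so only two windows remain. Each window has one label, which is what makes the task a per-word binary classification rather than a sliding-window search.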

Data Splits: We use multiple training sessions and dynamically select validation/test sessions based on keyword prevalence to ensure sufficient positive examples in held-out sets.
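One way to read "dynamically select validation/test sessions based on keyword prevalence" is to rank sessions by how often the keyword occurs and hold out the richest ones. The sketch below makes that assumption explicit; the function name, the session identifiers, and the use of raw counts instead of per-word prevalence are all hypothetical simplifications.

```python
def pick_heldout_sessions(keyword_counts, n_val=1, n_test=1):
    """Rank sessions by keyword count; richest go to test, then validation.

    keyword_counts: dict mapping session id -> number of keyword occurrences.
    Returns (train_ids, val_ids, test_ids).
    """
    # Sort by descending count; break ties by session id for reproducibility
    ranked = sorted(keyword_counts, key=lambda s: (-keyword_counts[s], s))
    test_ids = ranked[:n_test]
    val_ids = ranked[n_test:n_test + n_val]
    train_ids = sorted(ranked[n_test + n_val:])
    return train_ids, val_ids, test_ids

# Made-up counts for illustration only
counts = {"ses-1": 3, "ses-2": 11, "ses-3": 0, "ses-4": 7, "ses-5": 2}
train_ids, val_ids, test_ids = pick_heldout_sessions(counts)
print(train_ids, val_ids, test_ids)
```

Holding out keyword-rich sessions raises the number of positives available for evaluation, at the cost of a held-out prevalence that is higher than in the corpus as a whole.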

Model Architecture

The tutorial's baseline model addresses the challenges through three components:

Note: The notebook first demonstrates individual components with simplified examples (e.g., ConvTrunk with stride-2), then presents the full training architecture below.

1. Convolutional Trunk

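The model begins with a Conv1D layer projecting the 306 MEG channels to 128 dimensions, followed by a residual block, a stride-25 downsampling convolution, and a final kernel-7 convolution, with ELU activations in between: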

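```python
# Fragment from the model's __init__ (requires `import torch.nn as nn`).
# ResNetBlock1D is a residual block defined elsewhere in the tutorial.
self.trunk = nn.Sequential(
    nn.Conv1d(306, 128, 7, 1, padding='same'),  # 306 MEG channels -> 128 features
    ResNetBlock1D(128),
    nn.ELU(),
    nn.Conv1d(128, 128, 50, 25, 0),  # stride-25 downsampling
    nn.ELU(),
    nn.Conv1d(128, 128, 7, 1, padding='same'),
    nn.ELU(),
)
```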
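To see how much the stride-25 layer shortens the time axis, the standard output-length formula for an undilated 1D convolution, ⌊(L_in + 2p − k) / s⌋ + 1, can be applied directly. The sketch below assumes that the residual block preserves sequence length, as the `padding='same'` convolutions do; the helper names and the example window lengths are illustrative.

```python
def conv1d_out_len(length, kernel, stride=1, padding=0):
    """Output length of a 1D convolution with dilation 1."""
    return (length + 2 * padding - kernel) // stride + 1

def trunk_out_len(length):
    # The kernel-7, stride-1 'same' convolutions leave the length unchanged,
    # so only the kernel-50, stride-25, unpadded layer changes it.
    return conv1d_out_len(length, kernel=50, stride=25)

for n_samples in (250, 500):
    print(n_samples, "->", trunk_out_len(n_samples))
```

A 1-second window at 250 Hz (250 samples) is reduced to 9 timepoints, and a 2-second window to 19, so each trunk output step summarizes 50 input samples with 50% overlap between neighbours.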

2. Temporal Attention

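The trunk output is projected to 512 dimensions before splitting into two parallel 1×1 convolution heads: one producing per-timepoint logits, the other producing attention scores. The attention scores are softmax-normalized over time and used as weights when summing the per-timepoint logits into a single window-level logit: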

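```python
# Fragment from the model's __init__: projection head and two parallel 1x1 convolutions
self.head = nn.Sequential(nn.Conv1d(128, 512, 4, 1, 0), nn.ReLU(), nn.Dropout(0.5))
self.logits_t = nn.Conv1d(512, 1, 1, 1, 0)  # per-timepoint logits
self.attn_t = nn.Conv1d(512, 1, 1, 1, 0)    # per-timepoint attention scores

def forward(self, x):
    h = self.head(self.trunk(x))                    # (batch, 512, T')
    logit_t = self.logits_t(h)                      # (batch, 1, T')
    attn = torch.softmax(self.attn_t(h), dim=-1)    # weights sum to 1 over time
    return (logit_t * attn).sum(dim=-1).squeeze(1)  # one logit per window
```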
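The pooling step in `forward` can be checked by hand on a toy sequence. The following plain-Python sketch mirrors the softmax-weighted sum for a single window; the logits and attention scores are made-up numbers chosen to show the two extremes.

```python
import math

def attention_pool(logits_t, attn_scores_t):
    """Softmax the attention scores over time, then take the weighted sum of logits."""
    m = max(attn_scores_t)
    exps = [math.exp(a - m) for a in attn_scores_t]  # subtract max for stability
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = sum(w * z for w, z in zip(weights, logits_t))
    return pooled, weights

logits = [-2.0, -1.0, 3.0, -2.0]

# Equal attention scores reduce to a plain average of the logits.
uniform, _ = attention_pool(logits, [0.0, 0.0, 0.0, 0.0])

# A strongly peaked score lets one timepoint dominate the window-level logit.
peaked, weights = attention_pool(logits, [0.0, 0.0, 10.0, 0.0])

print(uniform, peaked)
```

With uniform attention, a brief keyword-related response is averaged away by the surrounding timepoints; with peaked attention, the pooled logit stays close to the strongest per-timepoint evidence.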

3. Loss Functions for Extreme Imbalance

Standard cross-entropy fails under extreme class imbalance. We employ two complementary losses:

- Focal Loss: Down-weights easy negatives by $(1-p_t)^\gamma$, with class prior $\alpha=0.95$ matching the <1% base rate. This prevents “always negative” collapse.

- Pairwise Ranking Loss: Directly optimizes the ordering of positive vs. negative scores, improving precision-recall trade-offs:

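```python
import torch
import torch.nn.functional as F

def pairwise_logistic_loss(scores, targets):
    pos, neg = scores[targets == 1], scores[targets == 0]
    if pos.numel() == 0 or neg.numel() == 0:
        return scores.sum() * 0.0  # no positive/negative pair in this batch
    # Sample one random negative per positive (an assumed pairing scheme) and penalize inversions
    idx = torch.randint(neg.numel(), (pos.numel(),), device=scores.device)
    margins = pos - neg[idx]
    return F.softplus(-margins).mean()  # stable form of log(1 + exp(-margin))
```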
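For the focal loss mentioned above, a per-example sketch makes the down-weighting concrete. It uses the common binary form −α_t (1 − p_t)^γ log(p_t), with α = 0.95 taken from the text. The value γ = 2 is an assumption, since the text does not state it, and applying α to the positive class is likewise assumed.

```python
import math

def focal_loss(p, y, alpha=0.95, gamma=2.0):
    """Binary focal loss for one example.

    p: predicted probability of the keyword class
    y: 1 for keyword, 0 for non-keyword
    alpha weights positives; negatives get (1 - alpha).
    gamma=2.0 is an assumed default, not taken from the tutorial.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy_negative = focal_loss(p=0.01, y=0)  # confident and correct
hard_positive = focal_loss(p=0.01, y=1)  # keyword missed

print(easy_negative, hard_positive)
```

The missed keyword contributes a loss many orders of magnitude larger than the correctly rejected negative, which is what keeps the abundant easy negatives from dominating the gradient.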

Training Strategy

Balanced Sampling: We construct training batches with ~10% positive rate (vs. natural <1%) by:

- Including most/all positive examples

- Subsampling negatives proportionally

- Shuffling each batch

This ensures gradients aren’t starved by all-negative batches while keeping evaluation on natural class priors for realistic metrics.
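A minimal sketch of this batch construction, using only the standard library, is shown below. The generator name, the rule of walking through the positives once per epoch, and drawing fresh negatives for each batch are assumptions about one reasonable implementation, not the notebook's sampler.

```python
import random

def balanced_batches(labels, batch_size=64, pos_fraction=0.1, seed=0):
    """Yield lists of example indices with roughly pos_fraction positives each."""
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    rng.shuffle(pos)
    n_pos = max(1, round(batch_size * pos_fraction))
    n_neg = batch_size - n_pos
    # Walk through the positives once; draw fresh negatives for every batch.
    for start in range(0, len(pos), n_pos):
        batch = pos[start:start + n_pos] + rng.sample(neg, n_neg)
        rng.shuffle(batch)
        yield batch

# Toy label vector with 1% positives (12 of 1200)
labels = [1 if i % 100 == 0 else 0 for i in range(1200)]
batches = list(balanced_batches(labels))
print(len(batches), [sum(labels[i] for i in b) for b in batches])
```

With a batch size of 64 and a 10% target, each batch holds 6 positives and 58 negatives, so the 12 positives in this toy example fill exactly two batches.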

Preprocessing: The dataset applies per-channel z-score normalization and clips outliers beyond ±10σ before feeding data to the model.
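The normalization and clipping step can be sketched in NumPy as follows. The dataset class performs this internally; the version here only illustrates the arithmetic, and it assumes the statistics are computed per channel over the time axis of whatever array is passed in.

```python
import numpy as np

def standardize_and_clip(x, clip_sigma=10.0, eps=1e-8):
    """Z-score each channel over time, then clip to +/- clip_sigma.

    x: array of shape (n_channels, n_samples)
    """
    mean = x.mean(axis=1, keepdims=True)
    std = x.std(axis=1, keepdims=True)
    z = (x - mean) / (std + eps)  # eps guards against flat channels
    return np.clip(z, -clip_sigma, clip_sigma)

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 1000))
x[0, 500] = 1e6  # simulated artifact on channel 0
z = standardize_and_clip(x)
print(z.max(), z[1].mean(), z[1].std())
```

Clipping bounds the influence of isolated artifacts: the simulated spike ends up at exactly 10 rather than at its unclipped z-score of roughly 30.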

Data Augmentation:

- Temporal shifts: randomly roll each sample by ±4 timepoints (±16 ms at 250 Hz)

- Additive Gaussian noise: σ=0.01 added to normalized signals
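Both augmentations fit in a few lines of NumPy. In this sketch the shift is drawn uniformly from −4 to +4 samples and applied as a circular roll; the uniform draw and the function name `augment` are assumptions, while the shift range and noise level follow the values listed above.

```python
import numpy as np

def augment(window, rng, max_shift=4, noise_std=0.01):
    """Randomly roll a (n_channels, T) window in time and add Gaussian noise."""
    shift = int(rng.integers(-max_shift, max_shift + 1))  # upper bound is exclusive
    shifted = np.roll(window, shift, axis=-1)             # circular shift along time
    return shifted + rng.normal(0.0, noise_std, size=window.shape)

rng = np.random.default_rng(0)
window = rng.standard_normal((306, 250))  # stand-in for one normalized MEG window
augmented = augment(window, rng)
print(augmented.shape)
```

Because the roll is circular, up to four samples wrap from one end of the window to the other; with windows that include pre- and post-onset buffers, this affects only the edges.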

Regularization: Dropout (p=0.5), weight decay

Evaluation Metrics

Traditional accuracy is meaningless under extreme imbalance (always predicting “no keyword” yields >99% accuracy). We employ metrics that reflect real-world BCI deployment:

Threshold-Free Metrics

Area Under Precision-Recall Curve (AUPRC)

- Baseline equals positive class prevalence (~0.001 for “Watson” on the full dataset, ~0.005 on the test set chosen to maximize prevalence)

- Aim for 2–10× improvement over chance

- More informative than AUROC under heavy imbalance

Precision-Recall Trade-off:

- Precision: Fraction of predicted keywords that are correct (controls false alarms)

- Recall: Fraction of true keywords detected
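As a worked example, AUPRC is often estimated as average precision: the mean of the precision values measured at the rank of each true positive. The plain-Python sketch below uses that estimator on made-up scores and also reports the multiple over the chance baseline (prevalence).

```python
def average_precision(scores, labels):
    """Average precision: mean of precision values at the rank of each positive.

    Ties in scores keep their input order in this sketch.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("average precision is undefined without positives")
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank  # precision at this recall step
    return total / n_pos

# Made-up toy data: 2 positives among 10 examples
labels = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
scores = [0.1, 0.4, 0.9, 0.2, 0.3, 0.05, 0.35, 0.15, 0.25, 0.0]

ap = average_precision(scores, labels)
prevalence = sum(labels) / len(labels)
print(ap, prevalence, ap / prevalence)
```

Here the positives rank first and third, giving precisions of 1/1 and 2/3 and an average precision of 5/6, about 4.2 times the prevalence of 0.2. On real keyword data the prevalence is far lower, so the same ratio corresponds to a much smaller absolute AUPRC.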

User-Facing Deployment Metrics

False Alarms per Hour (FA/h):

- Practical constraint: target <10 FA/h for usability

- Computed as: (False Positives / total_seconds) × 3600

- Evaluated at fixed recall targets (e.g., 0.2, 0.4, 0.6)

Operating Point Selection: Choose threshold on validation to meet FA/h or precision targets; report test results at that fixed threshold.
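The two steps above, picking a threshold for a recall target and then counting false alarms per hour, can be sketched as follows. The helper names, the toy validation scores, and the 30-minute recording duration are illustrative assumptions.

```python
def false_alarms_per_hour(scores, labels, threshold, total_seconds):
    """(False positives / total_seconds) * 3600 at a given decision threshold."""
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    return false_pos / total_seconds * 3600.0

def recall_at(scores, labels, threshold):
    true_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    return true_pos / sum(labels)

def threshold_for_recall(scores, labels, target_recall):
    """Highest threshold whose recall reaches target_recall."""
    # Recall only changes at the scores of positives, so those are the candidates.
    candidates = sorted({s for s, y in zip(scores, labels) if y == 1}, reverse=True)
    for t in candidates:
        if recall_at(scores, labels, t) >= target_recall:
            return t
    raise ValueError("target recall is not reachable")

# Toy validation set covering 30 minutes of audio (made-up numbers)
val_labels = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0]
val_scores = [0.9, 0.8, 0.1, 0.7, 0.2, 0.6, 0.65, 0.3, 0.4, 0.05, 0.2, 0.15]

t = threshold_for_recall(val_scores, val_labels, target_recall=0.6)
fa_h = false_alarms_per_hour(val_scores, val_labels, t, total_seconds=1800)
print(t, fa_h)
```

In this toy case a threshold of 0.6 recovers 3 of 5 keywords while letting through 2 false positives in 30 minutes, i.e. about 4 FA/h. The threshold found on validation data would then be applied unchanged to the test set.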

Performance Interpretation

- Chance: Prevalence (% of words matching the keyword)

- 2–5× Chance: Modest but meaningful improvement

- >10× Chance: Strong performance for this challenging task

Computational Requirements

- GPU: Google Colab free tier (T4/L4 GPU) sufficient

- Training Time: ~30 minutes for the baseline on default configuration

- Memory: <16 GB GPU RAM with batch size 64

- Dataset: Automatically downloaded by the pnpl library (~50 GB for the full set, ~5 GB for the default subset)

The tutorial is designed to run on consumer hardware by training on a subset of data. To scale to the full 50+ hours of data, increase training sessions in the configuration and use a higher-tier GPU (V100/A100).

Learning Goals

By working through this tutorial, you will:

- Frame KWS from continuous MEG as a rare-event detection problem with event-referenced windowing

- Handle extreme class imbalance through balanced sampling, focal loss, and pairwise ranking

- Build a lightweight temporal model (Conv1D + attention) trainable on consumer GPUs

- Evaluate with appropriate metrics: AUPRC, FA/h at fixed recall, precision-recall curves

- Understand trade-offs between sensitivity (recall), false alarm rate, and practical usability

- Gain hands-on experience with a real-world non-invasive BCI dataset

Tutorial Structure

The accompanying Jupyter notebook provides a complete, executable walkthrough:

- Setup & Configuration — Install dependencies, configure paths and hyperparameters

- Dataset Exploration — Inspect HDF5 files (MEG signals) and TSV files (annotations)

- Problem Formulation — Visualize challenges (class imbalance, signal noise)

- Model Components — Interactive demos of each architectural component:

  - Convolutional trunk (spatial-temporal processing)

  - Temporal attention (adaptive pooling)

  - Focal loss (imbalance handling)

  - Pairwise ranking (order-based training)

  - Balanced sampling (batch composition)

- Training — Full training loop with PyTorch Lightning, early stopping, logging

- Evaluation — AUPRC, ROC, FA/h curves, confusion matrices, threshold analysis

- Next Steps — Suggested experiments (different keywords, architectures, augmentations)

Notebook Access

Access the full interactive tutorial:

Links:

- Interactive (Colab): Open in Google Colab

- Source (GitHub): View on GitHub

- Workshop Paper: arXiv:2510.21038

- LibriBrain Dataset: View on HuggingFace

Requirements: A Google account for Colab, or local Jupyter Notebook install with Python 3.10+

Besides the accompanying workshop paper